In the realm of Artificial Intelligence (AI), supercomputers are increasingly vital. These immense machines now serve as the foundation of AI research, providing unmatched processing capabilities and unlocking avenues for innovative possibilities.

Supercomputers are revolutionizing the AI landscape through advanced parallel processing and optimized architectures, significantly expediting tasks like training, inferencing, and optimization. Leveraging parallel processing, these powerful machines break down complex AI tasks into smaller, more manageable segments, facilitating simultaneous processing by multiple processors. This translates to quicker execution times, reducing the learning time for AI models from extensive datasets.
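The decomposition described above can be sketched in a few lines of Python. This is only an illustration of the split, process, and combine pattern, not how any particular supercomputer schedules work: the function names and the sum-of-squares workload are hypothetical, and threads are used purely so the sketch runs anywhere. In CPython, threads do not execute Python code truly in parallel, so this shows the structure rather than the speedup; real systems spread the segments across many processes and nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_segments(items, n_segments):
    """Split a list into n_segments contiguous, nearly equal chunks."""
    size, extra = divmod(len(items), n_segments)
    segments, start = [], 0
    for i in range(n_segments):
        # The first `extra` segments take one additional item each.
        end = start + size + (1 if i < extra else 0)
        segments.append(items[start:end])
        start = end
    return segments


def partial_sum_of_squares(segment):
    """Work done by one worker on its own segment (hypothetical workload)."""
    return sum(x * x for x in segment)


def parallel_sum_of_squares(items, n_workers=4):
    """Fan the segments out to workers, then combine the partial results."""
    segments = split_into_segments(items, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, segments))
    return sum(partials)


data = list(range(1_000))
total = parallel_sum_of_squares(data, n_workers=4)
print(total)
```

The key design point is that each segment can be processed without knowing about the others; only the final combine step needs every partial result.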

The AI supercomputer market is forecast to grow from USD 1.2 billion in 2023 to USD 3.3 billion by 2028, a CAGR of 22.0%. – MarketsandMarkets

This growth is primarily driven by the increasing use of AI supercomputers in the global healthcare sector, where they help expedite drug discovery by simulating molecular interactions, predicting potential drug candidates, and modeling compound behavior.
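As a quick sanity check on the forecast quoted above, the compound annual growth rate (CAGR) can be recomputed from the two endpoints. The helper name `cagr` is our own, and the inputs are the rounded figures from the text, so the result should land close to, but not exactly on, the published 22.0%; the small gap is presumably down to rounding in the published endpoints.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# Rounded endpoints from the forecast: USD 1.2 billion (2023) to USD 3.3 billion (2028).
rate = cagr(1.2, 3.3, 2028 - 2023)
print(f"Implied CAGR: {rate:.1%}")
```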

Major players in the AI supercomputer market include NVIDIA Corporation (US), Intel Corporation (US), Advanced Micro Devices, Inc. (US), Samsung Electronics (South Korea), and Micron Technology, Inc. (US). These market leaders are expected to see lucrative opportunities over the coming five years, driven by new product launches and advancements within the industry.

Venturing beyond mere computational speed, incorporating AI into the forefront of supercomputing heralds a transformative epoch in technological innovation. This blog post aims to guide you through the intricate terrain where AI intersects with supercomputing, revealing unexplored dimensions of capabilities. From diverse applications to tangible real-world examples, we aim to offer insights into future trends and the myriad opportunities that this convergence presents.

Supercomputing Technology

Supercomputing has developed over many decades, tracing its roots to the Colossus machine that entered operational use at Bletchley Park in the 1940s. Designed by Tommy Flowers, a research telephone engineer at the General Post Office (GPO), Colossus marked a crucial milestone and is widely regarded as the first programmable, electronic, digital computer.

Over time, supercomputing has evolved into specialized computing systems made up of interconnects, I/O systems, and memory and processor cores designed to meet the colossal computational requirements of AI. Unlike traditional computers, supercomputers utilize multiple central processing units (CPUs), which are organized into compute nodes.

These nodes can include a processor or a group of processors using symmetric multiprocessing (SMP) and a memory block. On a large scale, a supercomputer may encompass tens of thousands of nodes, and these nodes can collaborate on solving specific problems through interconnecting communication. Additionally, nodes use interconnects to communicate with I/O systems, including data storage and networking.

Supercomputer performance is measured in floating-point operations per second (FLOPS). One petaFLOPS equals a thousand trillion FLOPS, so a 1-petaFLOPS system can perform one quadrillion (10^15) floating-point operations every second. Put differently, a supercomputer can deliver on the order of one million times the processing power of the fastest laptop.
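To make these units concrete, here is a small back-of-the-envelope sketch. The laptop rate of 1 gigaFLOPS and the workload size are assumptions chosen only so the ratio matches the "one million times" comparison above; real laptops and real workloads vary widely.

```python
# SI prefixes commonly used with FLOPS.
PREFIXES = {"giga": 1e9, "tera": 1e12, "peta": 1e15, "exa": 1e18}


def seconds_to_finish(total_operations, flops):
    """Time needed to run a workload at a sustained rate of `flops`."""
    return total_operations / flops


supercomputer_flops = 1 * PREFIXES["peta"]  # the 1-petaFLOPS system from the text
laptop_flops = 1 * PREFIXES["giga"]         # assumption: a laptop sustaining 1 gigaFLOPS

workload = 1e18  # hypothetical job: 10^18 floating-point operations

speedup = supercomputer_flops / laptop_flops
t_super = seconds_to_finish(workload, supercomputer_flops)
t_laptop = seconds_to_finish(workload, laptop_flops)

print(f"Speedup: {speedup:,.0f}x")
print(f"Supercomputer: {t_super:,.0f} s, laptop: {t_laptop / 3.156e7:,.1f} years")
```

Under these assumptions, a job that occupies the supercomputer for under twenty minutes would keep the laptop busy for decades.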

- Supercomputing vs. HPC – “Supercomputing” is often used interchangeably with high-performance computing (HPC). Strictly speaking, supercomputing denotes the execution of intricate and extensive calculations by a supercomputer, while HPC involves using multiple supercomputers to process complex and large calculations.

- Supercomputing vs. Parallel Computing – Supercomputers are also occasionally referred to as parallel computers because supercomputing involves parallel processing. Parallel processing occurs when multiple CPUs collaborate to solve a single calculation simultaneously. However, HPC scenarios also employ parallelism without the necessity of using a supercomputer.

(On the impact of Supercomputing) “These colossal machines have become the backbone of AI research, offering unparalleled processing power and opening doors to new possibilities.” – Datacenters.com

Impact of AI in Supercomputing

Critical to scientific breakthroughs, supercomputers play a pivotal role in tasks like combating cancer, discovering next-generation materials, understanding disease patterns, simulating nuclear reactions, modeling climate change, and decoding genomic sequences. With unmatched capabilities in processing and analyzing extensive datasets, these computational powerhouses empower researchers to address challenges that were once considered unattainable.

Supercomputing applies very large machines to shorten the time needed to solve a problem. Its synergy with AI is a force multiplier, enabling many tasks to run concurrently. Rather than dedicating a million computing cores to a single task, HPC divides the workload into smaller segments, for example assigning 10,000 cores to one aspect of the problem and another 10,000 to a different aspect. This approach resembles an intensified form of multitasking and improves computational efficiency.

The convergence of AI and supercomputing has opened new frontiers. A notable example is the partnership between G42, the leading AI and cloud computing company in Abu Dhabi, UAE, and Cerebras Systems, a pioneer in accelerating generative AI. The partnership aims to push AI toward more complex models and faster processing speeds.

With a targeted capacity of 36 exaFLOPs, this supercomputing network is intended to fuel numerous commercial applications and AI advancements. Its first deployment is Condor Galaxy 1 (CG-1), a 4 exaFLOP, 54 million core, cloud-based AI supercomputer that is already available for workloads. Cerebras and G42 plan to roll out two additional supercomputers, CG-2 and CG-3, in the US by the end of the year.

CG-1 is designed to enable G42 and its cloud clientele to rapidly and effortlessly train large, groundbreaking models, thereby expediting innovation. The Cerebras-G42 strategic partnership has progressed the development of cutting-edge AI models in areas such as Arabic bilingual chat, healthcare, and climate studies.

“Collaborating with Cerebras to rapidly deliver the world’s fastest AI training supercomputer and laying the foundation for interconnecting a constellation of these supercomputers across the world has been enormously exciting. This partnership brings together Cerebras’ extraordinary compute capability, together with G42’s multi-industry AI expertise. G42 and Cerebras’s shared vision is that Condor Galaxy will be used to address society’s most pressing challenges across healthcare, energy, climate action and more.” – Talal Alkaissi, CEO of G42 Cloud, a subsidiary of G42

Through meticulous alignment of hardware and software components, AI supercomputers can achieve remarkable performance gains. This optimization lets AI tasks run to completion swiftly and resource-efficiently, conserving both time and energy.

Image credit: Cerebras / REUTERS – G42 and Cerebras Systems announced Condor Galaxy, a global network of nine interconnected AI supercomputers offering a new approach to AI compute.

Use cases of AI in Supercomputing

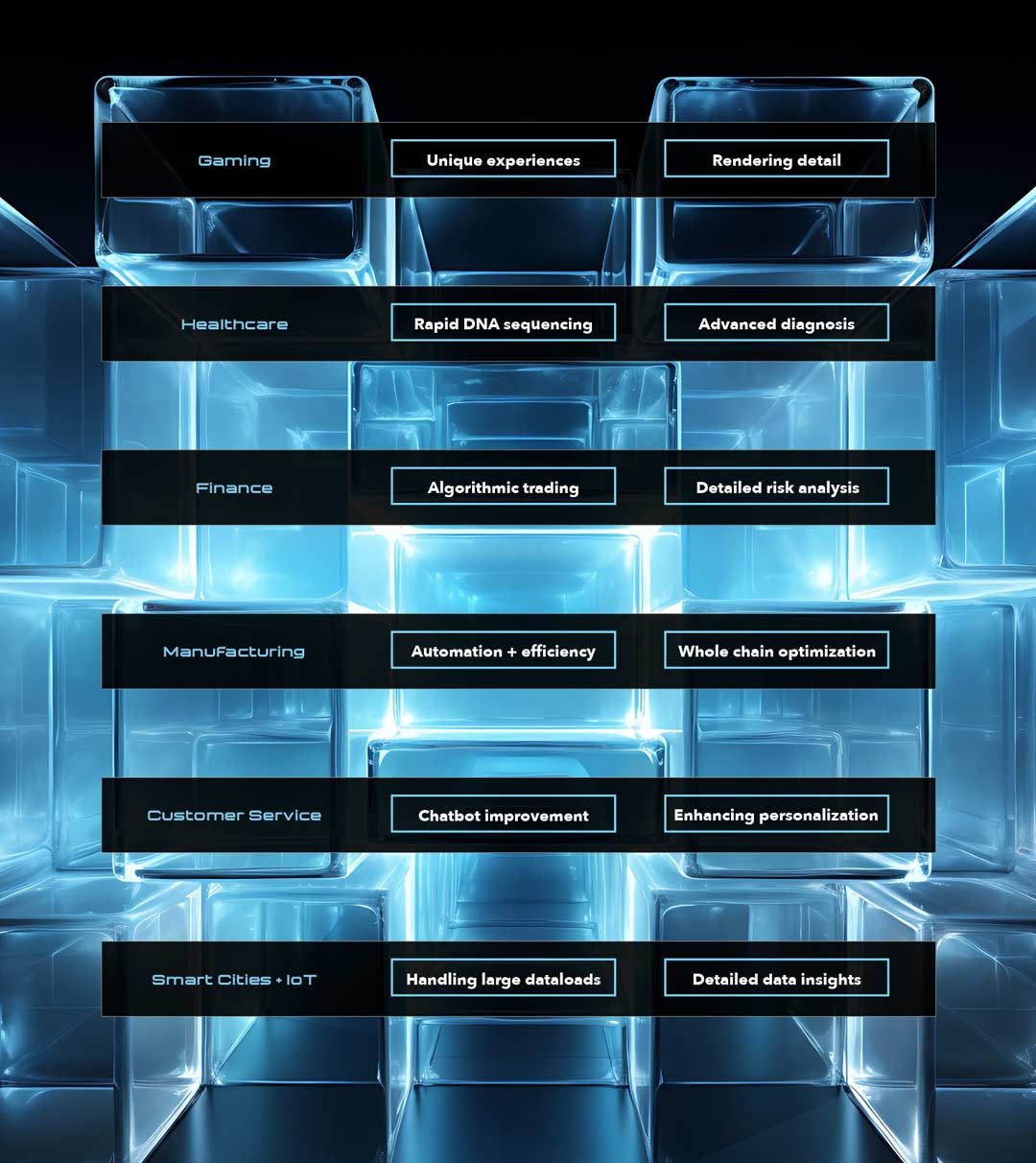

AI supercomputing finds applications across a spectrum of domains, encompassing gaming, healthcare, finance, manufacturing, customer service, and the advancement of smart cities. The utilization of the vast computational power inherent in supercomputers, coupled with the intelligence of AI, positions these applications for significant and transformative advancements.

AI Supercomputing Applications in Gaming

- Revolutionizing the Gaming Experience – The integration of AI supercomputing has brought about a profound transformation in the gaming industry. AI-powered games are gaining popularity, offering players a dynamic and immersive gaming experience. Leveraging advanced algorithms, AI can adapt gameplay, introduce unique challenges, and personalize each player’s gaming journey.

- Gaming Industry Transformations – AI’s impact extends beyond gameplay; it plays a crucial role in various aspects of game development, including character animations and graphics rendering. With the incorporation of AI supercomputing, the gaming industry can push the boundaries of creativity and realism, delivering games that captivate and engage players on an entirely new level.

AI Supercomputing Applications in Healthcare

- Genomic Sequencing – Genomic sequencing lets scientists read and scrutinize the genetic sequence of an organism or a virus. This aids in disease diagnosis, the development of personalized treatments, and the tracking of viral mutations. Complete DNA sequencing was originally a time-intensive process that took a team of researchers 13 years; supercomputers have now condensed it into a matter of hours.

- AI for Medical Diagnosis – AI supercomputing has paved the way for more precise and efficient medical diagnoses. AI algorithms can analyze intricate medical data, such as MRI scans or genetic information, enabling the early detection of diseases and conditions. This capability enhances diagnostic precision and facilitates timely interventions.

- Drug Discovery – Pharmaceutical companies use AI supercomputing to expedite drug discovery. By analyzing extensive datasets and simulating molecular interactions, AI identifies potential drug candidates, significantly reducing the time and resources required for new drug development.

- Enhancing Patient Care – AI-driven technologies improve patient care by optimizing treatment plans and predicting patient outcomes. From personalized treatment recommendations to predictive analytics, AI supercomputing holds the potential to revolutionize the healthcare industry, delivering enhanced care to patients.

AI Supercomputing Applications in Finance

- Algorithmic Trading – The financial sector leverages AI supercomputing in algorithmic trading, where AI algorithms analyze real-time market data, execute trades, and efficiently identify profitable opportunities. This technology is reshaping the finance landscape by automating trading processes and minimizing human errors.

- Risk Management – AI supercomputing significantly improves risk management in finance. Predictive analytics and machine learning algorithms empower financial institutions to assess and mitigate risks more effectively, safeguard investments, and ensure overall financial stability.

- Personal Finance Assistance – At a personal level, AI-powered financial assistants offer individuals personalized financial advice. These digital tools assist users in managing investments, budgeting, and financial planning, simplifying the complexities of personal finance navigation.

AI Supercomputing Applications in Manufacturing

- Automation and Efficiency – Manufacturing processes witness increased automation and efficiency with the integration of AI supercomputing. AI-powered robots and machines execute intricate tasks with precision, diminishing production time and costs. This heightened level of automation results in higher-quality products and elevated productivity.

- Quality Control – AI supercomputing proves invaluable for quality control in manufacturing. It can promptly identify defects and anomalies in real-time, ensuring that only products meeting stringent quality standards reach the market. This not only minimizes waste but also fosters enhanced consumer trust.

- Supply Chain Optimization – The supply chain reaps the benefits of AI supercomputing through route optimization, demand prediction, and improved inventory management. By analyzing extensive datasets, AI algorithms enhance the agility, cost-effectiveness, and responsiveness of supply chains to market fluctuations.

AI Supercomputing Applications in Customer Service

- Chatbots and Virtual Assistants – AI-driven chatbots and virtual assistants bring about a revolutionary change in customer service. They adeptly respond to customer inquiries, resolve issues, and provide information round the clock. This automation elevates the customer experience by minimizing response times and ensuring consistency in service.

- Enhanced Customer Experience – Customers enjoy personalized experiences facilitated by AI, which analyzes customer data and preferences to offer tailored product recommendations, fostering a more engaging and satisfying shopping experience.

- Reducing Response Time – The increasing data processing capabilities of AI supercomputing result in reduced response times in customer service. Queries are promptly addressed, leading to heightened customer satisfaction.

AI Supercomputing Applications in Smart Cities and IoT Integration

- Building Smarter Cities – AI plays a crucial role in shaping smart cities by optimizing resource allocation and urban planning. AI supercomputing is instrumental in creating more sustainable and efficient urban environments, from traffic management to energy consumption.

- IoT and Infrastructure – The effectiveness of the Internet of Things (IoT) relies on AI supercomputing. AI processes the vast amount of data generated by IoT devices, facilitating efficient data analysis and decision-making. This integration results in smarter infrastructure and enhanced services for residents.

- Urban Planning and Resource Management – AI contributes to urban planning by providing valuable insights for resource allocation. It aids cities in managing resources such as water, electricity, and transportation more efficiently, promoting overall sustainability.

Other AI Supercomputing Use Cases

- Weather Forecasting and Climate Research – Supercomputers, fueled by numerical modeling data collected from satellites, buoys, radar, and weather balloons, empower field experts with enhanced insights into the impact of atmospheric conditions. This heightened understanding equips them to provide more informed advice to the public on weather-related matters, such as whether to bring a jacket or how to respond to a thunderstorm.

- Aviation Engineering – Supercomputing systems in the aviation sector support applications such as solar flare detection, turbulence prediction, and the approximation of aeroelasticity (how aerodynamic loads affect an aircraft) to improve aircraft design. Notably, GE Aerospace has enlisted the world’s fastest supercomputer, Frontier, to evaluate open fan engine architecture for the next generation of commercial aircraft. This work has the potential to reduce carbon dioxide emissions by more than 20 percent, showcasing the contribution of supercomputing to aviation innovation.

- Space Exploration – Supercomputers excel in processing vast datasets gathered by sensor-laden devices, including satellites, probes, robots, and telescopes. Leveraging this data, these machines can simulate outer space conditions on Earth. Through advanced generative algorithms, they can recreate artificial environments that closely resemble various patches of the universe.

- Nuclear Fusion Research – Frontier and Summit, two of the world’s most powerful supercomputers, are engaged in creating simulations to forecast energy loss and enhance performance in plasma. The project aims to contribute to the development of next-generation technology for fusion energy reactors. Mimicking the energy generation processes of the sun, nuclear fusion is a promising candidate in the pursuit of abundant, sustainable, long-term energy free of carbon emissions and long-lived radioactive waste.

- Intelligence Agencies – Government intelligence agencies employ supercomputers to monitor communication channels between private citizens and potential fraudsters. The primary requirement for these agencies is the robust numerical processing power of supercomputers, enabling them to encrypt various forms of communication, including cell phones, emails, and satellite transmissions.

Benefits of AI in Supercomputing

The integration of AI brings forth numerous advantages that substantially enhance the capabilities of these high-performance computational systems. This encompasses:

- Transforming Processing Speed: AI supercomputers surpass conventional computational speeds, pushing the limits to provide accelerated processing capabilities. Standard computers may be 100 to 1,000 times slower, so a task that takes them numerous hours can be completed by a supercomputer in a small fraction of that time.

- Enhanced Automation and Operational Efficiency: Automation and efficiency characterize AI supercomputing. Tasks that were previously manual and time-intensive can now be automated, leading to substantial gains in operational efficiency. These robust machines are pivotal in accelerating AI training, inferencing, and optimization tasks, achieving unparalleled speeds and contributing to efficiency gains across diverse applications.

- Advancing Safety Measures: Supercomputers extend their impact beyond CGI and scientific applications, contributing to making the world a safer place. Simulations or experiments that pose challenges or extreme dangers in the real world can be conducted on a supercomputer. For instance, ensuring the functionality of nuclear weapons requires testing, a process that, without supercomputers, would involve detonating a nuclear bomb. However, these powerful computers enable engineers to achieve comparable results without exposing the world to the risks of an actual nuclear explosion.

- Advancements in Scientific Frontiers: The immense processing capabilities of supercomputers enable tasks that surpass the capacities of regular computers. AI integration in supercomputing is crucial in driving scientific breakthroughs, encompassing activities such as simulating nuclear reactions, climate modeling, and deciphering genomic sequences. The incorporation of AI expands research horizons, allowing scientists to take on challenges previously deemed impossible.

The benefits of integrating AI into supercomputing are transformative, unlocking unprecedented capabilities across various domains. From revolutionizing processing speed to enhancing automation and operational efficiency, AI supercomputers redefine the boundaries of computational possibilities.

“AI supercomputing has emerged as a transformative force, redefining our understanding of computational capabilities…(performing) tasks that were once considered beyond human reach.” – OmniRaza

Real World Examples: Tech

The top-performing supercomputers of today can execute simulations that would take a personal computer 500 years, as reported by the Partnership for Advanced Computing in Europe. The rankings provided below are based on the Top500 project, co-founded by Jack Dongarra, which assesses how quickly non-distributed computer systems solve a set of linear equations using a dense random matrix. This evaluation relies on the LINPACK Benchmark, offering an estimation of a computer’s speed in running either a single program or multiple programs.
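The idea behind a LINPACK-style measurement can be sketched as follows: build a dense random matrix, time how long it takes to solve the linear system, and divide the conventional operation count for that solve, (2/3)n^3 + 2n^2, by the elapsed time. This is a toy illustration in pure Python, not the benchmark code the Top500 list actually uses; the function names, the matrix size, and the use of plain Gaussian elimination with partial pivoting are all our own choices.

```python
import random
import time


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Work on an augmented copy so the caller's data is left untouched.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        # Partial pivoting: move the largest remaining entry onto the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - tail) / m[row][row]
    return x


def linpack_style_run(n, seed=0):
    """Time one dense solve; return (estimated FLOPS, largest residual)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    # Conventional operation count for an LU-based dense solve.
    flop_count = (2.0 / 3.0) * n**3 + 2.0 * n**2
    # Check the answer: how far is a x from b in the worst row?
    residual = max(
        abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n)
    )
    return flop_count / elapsed, residual


flops, residual = linpack_style_run(60)
print(f"Estimated rate: {flops / 1e6:.1f} megaFLOPS (residual {residual:.1e})")
```

Interpreted Python will report a rate many orders of magnitude below the petaFLOPS figures in the list that follows; the point is only to show what the number measures.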

- Frontier – Operating at the Oak Ridge National Lab in Tennessee, Frontier is the first documented supercomputer to achieve “exascale,” sustaining a computational power of 1.1 exaFLOPS. To put it simply, it can perform more than a quintillion calculations per second. Constructed from 74 HPE Cray EX supercomputing cabinets, each weighing nearly 8,000 pounds, Frontier surpasses the combined power of the next seven supercomputers. The laboratory notes that the entire global population would require over four years to accomplish what Frontier can achieve in just one second.

- Fugaku – Fugaku debuted at 416 petaFLOPS, securing the world title for two consecutive years. After a software upgrade in 2020, its performance peaked at 442 petaFLOPS. Built from 158,976 nodes powered by the Fujitsu A64FX microprocessor, this petascale computer takes its name from an alternative name for Mount Fuji. It is situated at the Riken Center for Computational Science in Kobe, Japan. Fugaku is dedicated to addressing pressing global challenges, particularly climate change. Its primary mission involves accurately predicting global warming scenarios based on carbon dioxide emissions and their impact on the global population.

- Lumi – Ten European countries have collaborated to introduce Lumi, the fastest supercomputer in Europe. Spanning 1,600 square feet and weighing 165 tons, this machine boasts a sustained computing power of 375 petaFLOPS, with a peak performance reaching 550 petaFLOPS, equivalent to the capacity of 1.5 million laptops. Notably energy-efficient, Lumi is housed at the CSC’s data center in Kajaani, Finland, where it benefits from natural climate conditions for cooling. Operating exclusively on carbon-free hydroelectric energy, it also contributes to district heating, utilizing 20 percent of the waste heat it produces.

- Leonardo – Leonardo, situated in the CINECA data center in Bologna, Italy, is a petascale supercomputer. This 2,000-square-foot system is divided into three modules: the booster, data center, and front-end and service modules. Powered by an Atos BullSequana XH2000 computer equipped with over 13,800 Nvidia Ampere GPUs, Leonardo achieves peak processing speeds of 250 petaFLOPS.

Image credit: Frontier. Frontier is the first documented supercomputer to achieve “exascale”; the capacity to perform a quintillion (1 followed by 18 zeros) calculations per second.

- Summit – Summit, which claimed the title of the world’s fastest computer upon its debut in 2018, currently maintains a top speed of 200 petaFLOPS. Sponsored by the United States Department of Energy and built by IBM under a $325 million contract, this 9,300-square-foot machine supports work in AI, materials science, and genomics. Summit serves as a crucial tool for scientists and researchers tackling complex challenges in energy, intelligent systems, human health, and various scientific domains. Its applications span earthquake modeling, materials science studies, genetic research, and the prediction of neutrino lifetimes in physics. Like Frontier, Summit is housed at the Oak Ridge National Laboratory in Tennessee.

- Sunway TaihuLight – The Sunway TaihuLight, developed by the National Research Center of Parallel Computer Engineering and Technology, is housed at the National Supercomputing Center in Wuxi, Jiangsu, China, and is the sixth-fastest supercomputer globally. It was the first supercomputer to surpass a speed of 100 PFLOPS. Its processors run at 1.45 GHz (3.06 TFLOPS per CPU), and the system delivers 105 PFLOPS on LINPACK with a peak of 125 PFLOPS.

- Perlmutter – Perlmutter, an HPE (Hewlett Packard Enterprise) Cray EX supercomputer, is named after Saul Perlmutter, the Berkeley Lab astrophysicist who shared the 2011 Nobel Prize in Physics for groundbreaking contributions demonstrating the accelerating expansion of the universe. Built on the HPE Cray Shasta platform, Perlmutter is a heterogeneous system with 3,072 CPU-only and 1,792 GPU-accelerated nodes, delivering three to four times the performance of Cori, its predecessor.

- NVIDIA Selene – The NVIDIA Selene supercomputer, constructed based on the NVIDIA DGX SuperPOD™ reference architecture, provides an accelerated route to massive scale, presenting itself as a Top10-class supercomputer successfully implemented in less than a month. Factors driving Selene’s design include its adaptability as a readily available turnkey solution, the swift deployment achieved through its modular design and incremental scalability, and the tools employed for efficiently managing mission-critical mixed workloads.

- Tianhe-2A – With over 16,000 compute nodes, Tianhe-2A is the world’s most extensive deployment of Intel Ivy Bridge and Xeon Phi processors. Each node has 88 gigabytes of memory, contributing to a total memory capacity (CPU+coprocessor) of 1,375 tebibytes. Upon its deployment in 2013, Tianhe-2A was the fastest supercomputer. It was developed by over 1,300 engineers and scientists, and China invested 2.4 billion yuan (US$390 million) in its construction. Presently, it is predominantly employed for analyzing vast datasets, conducting intricate simulations, and addressing government security applications.

- Adastra – Adastra, the second-fastest system in Europe, is built using HPE and AMD technology. Its primary objective is to expedite the integration of HPC and AI workloads across various domains, encompassing climate research, astrophysics, material sciences, chemistry, new energies like fusion, and applications in biology and health. In late 2023, GENCI and CINES announced the first upgrade of Adastra, incorporating the AMD Instinct MI300A Accelerated Processing Unit (APU).

Image credit: NVIDIA – NVIDIA Selene is one of the world’s leading supercomputers; its modular design and scalability make it well suited as a turnkey solution.
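As a closing sanity check, the Frontier comparison quoted earlier (the whole world needing over four years to match one second of Frontier) can be reproduced with simple arithmetic. The population figure of 8 billion and the rate of one calculation per person per second are our assumptions; the 1.1 exaFLOPS figure comes from the Frontier entry above.

```python
frontier_ops_per_second = 1.1e18   # 1.1 exaFLOPS, from the Frontier entry
world_population = 8e9             # assumption: roughly 8 billion people
ops_per_person_per_second = 1.0    # assumption: one calculation per second each

# Seconds the whole population needs to match one second of Frontier.
seconds_needed = frontier_ops_per_second / (
    world_population * ops_per_person_per_second
)
years_needed = seconds_needed / (365.25 * 24 * 3600)

print(f"About {years_needed:.1f} years")
```

Under these assumptions the result is a little over four years, in line with the laboratory's statement.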